OpenAI’s o3, unveiled on December 20, 2024, is a groundbreaking AI model excelling in reasoning, adaptability, and safety. Designed for complex tasks in coding, math, and science, it features two versions: o3 and o3-mini, with broader o3-mini access planned for January 2025. While its advanced capabilities redefine benchmarks, the high compute cost limits widespread adoption to specialized uses.

OpenAI’s o3 marks a major advance in artificial intelligence, combining strong logical reasoning, task adaptability, and safety. o3 was introduced as the successor to the o1 model and is designed to address complex challenges in coding, mathematics, and science. The name skips “o2” to avoid trademark conflicts with the UK-based mobile carrier O2. Two versions of the model, o3 and o3-mini, have been developed, with access currently limited to invited researchers focused on safety and security. A broader release of o3-mini is planned for January 2025.

Capabilities and Performance

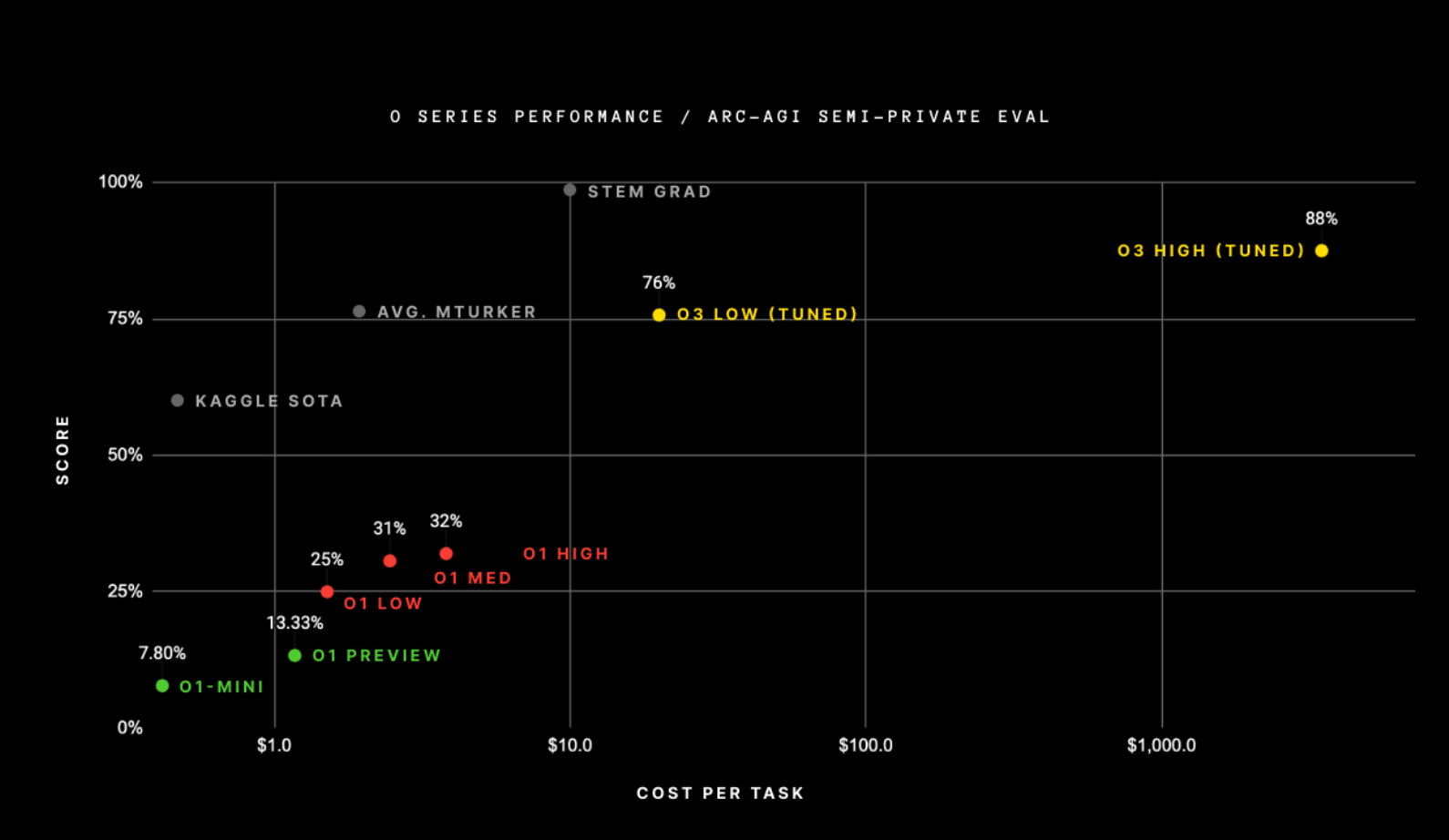

The o3 model performs exceptionally well on novel and challenging tasks. It excels at logical reasoning by employing what OpenAI refers to as a “private chain of thought” mechanism, which lets it plan ahead and solve multi-step problems. This has produced impressive scores on benchmarks like ARC-AGI, which evaluates adaptability and reasoning.

Key Benchmark Results

The table below highlights o3’s performance on the ARC-AGI dataset:

| Dataset | Tasks | Efficiency | Score | Cost/Task | Time/Task |
|---|---|---|---|---|---|
| Semi-Private Eval | 100 | High | 75.7% | $20 | 1.3 mins |
| Semi-Private Eval | 100 | Low | 87.5% | - | 13.8 mins |
| Public Eval | 400 | High | 82.8% | $17 | N/A |
| Public Eval | 400 | Low | 91.5% | - | N/A |

The high-efficiency (low-compute) configuration achieves strong scores at roughly $17 to $20 per task, while the low-efficiency (high-compute) configuration reaches breakthrough accuracy but requires significantly more resources: on the semi-private set it takes 13.8 minutes per task instead of 1.3.

Innovations in Reasoning

Unlike traditional language models that rely on static knowledge retrieval, o3 employs a dynamic mechanism called natural language program search. This approach enables the model to generate and execute step-by-step plans, or “Chains of Thought” (CoTs), for solving tasks. The ability to adapt knowledge dynamically during inference allows o3 to handle tasks it has never encountered before, making it a game-changer in the field of AI.
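The idea of searching over step-by-step plans can be illustrated with a toy example. The sketch below is not o3's mechanism, whose internals are not public; it is a minimal brute-force program search over a handful of hypothetical list operations (`PRIMITIVES`, `run_program`, and `search_program` are names invented here), and it returns the shortest sequence of steps that reproduces every input/output example.

```python
from itertools import product

# Hypothetical primitive operations on a list of integers (illustrative only).
PRIMITIVES = {
    "reverse": lambda xs: xs[::-1],
    "double": lambda xs: [2 * x for x in xs],
    "increment": lambda xs: [x + 1 for x in xs],
    "sort": lambda xs: sorted(xs),
}


def run_program(program, xs):
    """Apply each named step of the program to the list, in order."""
    for step in program:
        xs = PRIMITIVES[step](xs)
    return xs


def search_program(examples, max_steps=3):
    """Return the shortest step sequence consistent with all examples, or None."""
    for length in range(1, max_steps + 1):
        # Enumerate every sequence of primitives of the current length.
        for program in product(PRIMITIVES, repeat=length):
            if all(run_program(program, inp) == out for inp, out in examples):
                return program
    return None


# Two demonstrations of an unknown task: "double, then order descending".
examples = [([3, 1, 2], [6, 4, 2]), ([5, 4], [10, 8])]
program = search_program(examples)
print(program)
```

The search space grows exponentially with program length, which is one intuition for why this style of reasoning becomes expensive: each extra step multiplies the number of candidate plans that must be generated and checked.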

The Economics of OpenAI’s o3

OpenAI’s o3 model has set a new standard in AI performance, demonstrating remarkable results on benchmarks like ARC-AGI. However, this progress is closely tied to the use of test-time scaling, an approach that leverages significant computational resources during the inference phase to improve the quality of outputs. While test-time scaling has proven effective in achieving record-breaking accuracy, it has also raised critical questions about cost, scalability, and practical applications.

Test-time scaling involves techniques such as running multiple chips simultaneously, using advanced inference hardware, or extending computation time—sometimes up to 10–15 minutes per query. These methods enable o3 to deliver highly accurate responses, but they also make the model substantially more expensive to operate. For example, o3 achieved an 88% score on ARC-AGI, far surpassing the 32% achieved by its predecessor, o1. However, this came at a cost of over $1,000 per task in its high-compute configuration.
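One common way to trade inference compute for accuracy is to sample several independent answers and take a majority vote. Whether o3 does this is not stated in the source; the sketch below only illustrates the general cost/accuracy trade-off, under the simplifying assumptions that each sample is independently correct with probability `p_correct` and that there are only two possible answers. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p_correct: float, n_samples: int) -> float:
    """Probability that a strict majority of n independent samples is correct."""
    needed = n_samples // 2 + 1
    return sum(
        comb(n_samples, k) * p_correct**k * (1 - p_correct) ** (n_samples - k)
        for k in range(needed, n_samples + 1)
    )


# Compute cost grows linearly with the number of samples,
# while accuracy improves with diminishing returns.
for n in (1, 5, 25, 125):
    print(f"samples={n:>3}  accuracy={majority_vote_accuracy(0.6, n):.3f}")
```

Under these assumptions, a solver that is right 60% of the time per sample becomes steadily more reliable as samples are added, but each additional point of accuracy costs more compute than the last.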

The Cost of o3 Compared to Previous Models

The table below provides a detailed cost analysis of o3 compared to its predecessors:

| Model | ARC-AGI Score | Compute Cost/Task | Relative Compute Usage |
|---|---|---|---|
| o1-mini | 32% | ~$0.05 | Baseline |
| o1 | 32% | ~$5.00 | 100x |
| o3 (Efficient Mode) | 76% | ~$20 | ~40x |
| o3 (High Compute) | 88% | >$1,000 | ~170x |

As the table illustrates, the high-compute version of o3, while delivering top-tier performance, is far more expensive than its predecessors. Even the efficient mode of o3, at ~$20 per task, costs about four times as much per task as o1, although it scores 76% where o1 scores 32%.
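The dollar figures in the table can be turned into cost ratios directly. The sketch below uses only the approximate per-task costs listed above, treating ">$1,000" as a lower bound of 1000; the dictionary and function names are made up for illustration. Note that these dollar ratios do not match the table's "Relative Compute Usage" column, which presumably uses a different baseline or measures something other than price; the source does not say which.

```python
# Approximate per-task costs in USD, copied from the table above.
COST_PER_TASK_USD = {
    "o1-mini": 0.05,
    "o1": 5.00,
    "o3 (Efficient Mode)": 20.0,
    "o3 (High Compute)": 1000.0,  # table says ">$1,000", so this is a lower bound
}


def cost_ratio(model: str, baseline: str = "o1-mini") -> float:
    """How many times more one model costs per task than the baseline."""
    return COST_PER_TASK_USD[model] / COST_PER_TASK_USD[baseline]


for name in COST_PER_TASK_USD:
    print(f"{name:<22} {cost_ratio(name):>10,.0f}x the cost of o1-mini")

# Efficient-mode o3 relative to o1, and high-compute o3 relative to efficient mode.
print(cost_ratio("o3 (Efficient Mode)", baseline="o1"))
print(cost_ratio("o3 (High Compute)", baseline="o3 (Efficient Mode)"))
```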

Practical Applications and Limitations

Given its high operational costs, o3 is unlikely to become a tool for everyday tasks or casual users. Instead, it is better suited for specialized, high-stakes applications where the additional cost is justified by the value of accuracy and depth. For example:

- Strategic Decision-Making: Enterprises, such as sports teams or financial institutions, could use o3 for long-term planning and complex problem-solving.

- Scientific Research: Research institutions might leverage o3 to tackle advanced scientific questions that require multi-step reasoning.

However, o3’s limitations must be acknowledged. Despite its advanced reasoning capabilities, the model still struggles with some tasks that humans handle effortlessly. Moreover, the high-compute costs make it inaccessible to most individuals and small businesses.

Challenges and Opportunities

The success of o3 underscores the potential of test-time scaling but also highlights several challenges that must be addressed:

- Hardware Innovation:

  - Startups like Groq and Cerebras are developing more efficient AI inference chips, which could help reduce the costs of test-time scaling.

  - Companies like MatX are focusing on creating cost-effective hardware to make high-performance models more affordable.

- Balancing Cost and Performance:

  - OpenAI has demonstrated a trade-off between cost and accuracy with o3’s efficient mode. Future iterations may focus on optimizing this balance to make such models more practical.

- Economic Viability:

  - OpenAI has explored premium subscription tiers, ranging from $200 to $2,000 per month, to provide access to high-compute models. These tiers could cater to enterprise clients while ensuring sustainability.

Challenges and Future Directions

While o3’s capabilities mark a significant milestone, the model is not without limitations. High computational costs, coupled with its occasional failure to solve simpler tasks, underscore areas for improvement. These weaknesses will be tested further by future benchmarks such as ARC-AGI-2, which the ARC Prize team plans to launch in 2025. The new benchmark aims to raise the bar for AI adaptability and safety while fostering open-source contributions to advance the research community.

Conclusion

The OpenAI o3 model is a groundbreaking achievement in artificial intelligence, blending enhanced reasoning capabilities with robust safety mechanisms. Its ability to adapt dynamically to novel tasks represents a shift in AI technology, moving beyond static knowledge retrieval toward genuine generalization. While not yet AGI, o3 sets a strong foundation for future developments in general-purpose AI. With ongoing research and improvements, OpenAI continues to push the boundaries of what AI can achieve while ensuring alignment with human values. For now, o3 is a powerful tool for specific high-impact applications, but its economic model limits widespread adoption.

Looking forward, the industry must address these challenges by innovating hardware, optimizing compute efficiency, and exploring cost-effective scaling solutions. OpenAI’s o3 is a testament to the potential of test-time scaling, but its true impact will depend on how the AI community navigates the balance between performance and cost.