Let’s put some of the topics from the previous posts together. Imagine you’re designing a system that is read-heavy: for example, a product catalog API that gets 100 reads for every write, or a popular blog site where content is written once but read many times. Such a scenario calls for optimizations to handle a high volume of read traffic efficiently. Two common strategies are caching and replication. We’ve covered replication; now we introduce caching (with Redis) and outline a design that uses both.

The Power of Caching (Redis)

A cache is a high-speed data store (often in-memory) that sits between your application and the primary database. Redis is a popular open-source in-memory data store often used as a cache. The idea is to store frequently accessed data in Redis so that subsequent requests can get it from memory quickly, without hitting the slower disk-based database each time. Caching can drastically reduce latency for repetitive reads and reduce load on your database.

Cache-Aside Pattern (Lazy Loading): The most common caching strategy is called cache-aside.

The application checks the cache first when a read request comes in:

- Cache hit: If the data is found in the cache, return it immediately – the database is bypassed, which is a big win for performance.

- Cache miss: If the data is not in the cache, then query the database (this will be slower, but it’s only on a miss). Then populate the cache with that result, so that next time it will be a hit. Finally, return the data to the user.

By doing this, you ensure that data which is frequently requested ends up being served from the fast cache after the first miss. Less-frequently accessed data might not be in cache, but that’s okay – you only pay the cost on those infrequent requests.

Let’s illustrate this in code with a simple example using Redis (in pseudocode/Python for clarity):

    import json

    import redis

    r = redis.Redis()  # defaults to localhost:6379; pass host, port, etc. for your setup

    def get_product_details(product_id):
        cache_key = f"product:{product_id}"

        # 1. Try the cache first
        cached_data = r.get(cache_key)
        if cached_data is not None:
            # Cache hit: skip the database entirely
            return json.loads(cached_data)

        # 2. Cache miss: query the primary database (e.g., PostgreSQL)
        product = db_query_product_by_id(product_id)

        # 3. Store the result for next time, with an expiration time for safety
        r.set(cache_key, json.dumps(product), ex=300)
        return product

In this snippet, db_query_product_by_id represents the call to your database that fetches the product info (assumed here to return a JSON-serializable dict). We store the result in Redis under a key like "product:1234" with an expiration time (TTL) of 5 minutes (300 seconds) to avoid keeping stale data forever. The next call within 5 minutes will find it in Redis and skip the database.

Cache invalidation: One tricky aspect with caches is keeping them in sync with the database. If the underlying data changes (say, a product price is updated), the cache might serve old data until it’s refreshed or expired. Strategies to handle this include:

- Setting a reasonable TTL (time-to-live) on cache entries so they expire and get reloaded periodically (as done above).

- Proactively invalidating or updating the cache when a write happens. For example, after updating the product price in the DB, you could run DEL product:1234 in Redis or overwrite the key with the new value. This requires your application to have hooks on writes that clear or refresh the relevant cache keys. (Updating the cache in the same step as the database is often called write-through caching.)

- Accepting brief staleness. In some scenarios, it’s acceptable that stale data is shown for a short time (like a few seconds); this is the price for extreme read efficiency. For instance, a count of likes might lag slightly behind the actual count. Each system must decide its tolerance for staleness.
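The invalidate-on-write strategy can be sketched as follows. This is a minimal illustration rather than a definitive implementation: InMemoryCache is a hypothetical stand-in that mimics the get/set/delete shape of a Redis client so the example runs without a server, and the database dict and the function names are assumptions made for the example.

```python
import json


class InMemoryCache:
    """Stand-in for a Redis client (hypothetical; same get/set/delete shape)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value, ex=None):
        # A real cache would expire the key after `ex` seconds; ignored here.
        self._data[key] = value

    def delete(self, key):
        self._data.pop(key, None)


cache = InMemoryCache()
database = {1234: {"id": 1234, "name": "Widget", "price": 10.0}}  # fake DB table


def get_product(product_id):
    """Cache-aside read: try the cache, fall back to the database."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    product = database[product_id]
    cache.set(key, json.dumps(product), ex=300)
    return product


def update_product_price(product_id, new_price):
    """Write path: update the source of truth first, then drop the cached copy."""
    database[product_id]["price"] = new_price
    cache.delete(f"product:{product_id}")
```

Deleting the key, rather than overwriting it, keeps the write path simple: the next read misses and repopulates the cache from the database.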

Redis as a cache is very fast: operations run in memory and often complete in under a millisecond, and a single instance can typically handle on the order of a hundred thousand operations per second, more with pipelining. By offloading frequent reads to Redis, your primary database is freed up to handle writes or less frequent complex queries.

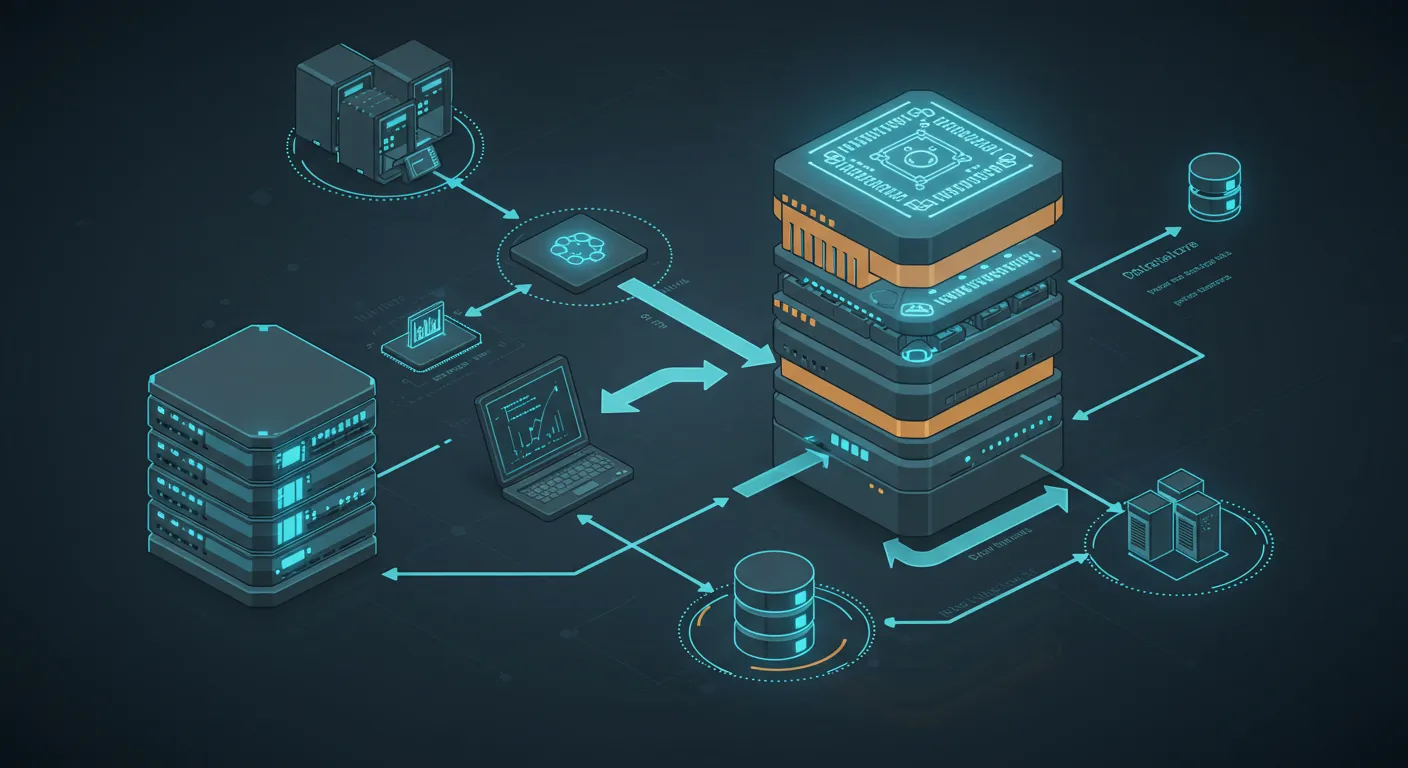

High-Read Architecture: Combining Replication and Caching

For a high-read, moderately-write system, here’s a common architecture that uses both replication and caching:

- Primary Database (PostgreSQL): This is the source of truth. All writes (inserts, updates) go here. The primary might also handle some reads, but we aim to reduce its load.

- Read Replicas: We set up one or more replicas of the Postgres primary. These replicas continuously apply the primary’s changes. Our application can direct read-only queries to these replicas. For example, analytical queries or any endpoints that are read-heavy and can tolerate slight replication lag go to a replica. This immediately multiplies read capacity (if you have 3 replicas, you roughly quadruple read throughput assuming even distribution including primary).

- Redis Cache Layer: In front of the database queries, we incorporate Redis as described. The application first checks Redis for cached results. We might cache the results of specific expensive queries or the output of constructing API responses. For example, if users frequently request the “top 10 products” list, that could be cached. Or each product detail could be cached individually as we showed. The cache can store fully rendered JSON responses or just database query results, depending on the use case.

How a request flows: When a user’s request comes in for data, the application does: check Redis -> if miss, query a database (possibly a read replica) -> then store result in Redis -> return to user. By doing this, if the same or another user hits the same endpoint again, the data comes straight from cache.

If the data is not found in the cache and we go to the DB, ideally we hit a read replica (so the primary isn’t burdened). The read replica returns the data (slower than the cache, but still typically within tens of milliseconds), and then we cache it. If the data was recently updated, there’s a small chance the replica hasn’t received the latest write yet (replication lag), in which case we might momentarily serve and cache slightly stale data. Depending on requirements, you can either always read from the primary when populating the cache (so the cache holds the freshest data) or accept eventual consistency. Often, critical reads (for example, right after a write that the user expects to see) are directed to the primary, and more generic reads go to a replica.
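One way to direct a user’s reads to the primary shortly after their own write is a small routing helper. The sketch below is an assumption-laden illustration: ReadRouter, the sticky_seconds window, and the node names are hypothetical, and timestamps are passed in explicitly to keep the example deterministic.

```python
import itertools


class ReadRouter:
    """Chooses a database for each read (hypothetical helper, not a library API)."""

    def __init__(self, replicas, sticky_seconds=5.0):
        self._replicas = itertools.cycle(replicas)  # simple round-robin
        self._sticky_seconds = sticky_seconds
        self._last_write_at = {}  # user_id -> timestamp of most recent write

    def record_write(self, user_id, now):
        self._last_write_at[user_id] = now

    def choose(self, user_id, now):
        """Return "primary" shortly after the user's own write, else a replica."""
        last_write = self._last_write_at.get(user_id)
        if last_write is not None and now - last_write < self._sticky_seconds:
            return "primary"
        return next(self._replicas)


router = ReadRouter(["replica-1", "replica-2"])
router.record_write("alice", now=100.0)
```

Here a read by alice at time 101.0 goes to the primary, while a read by another user at the same moment goes to a replica. The window should be chosen to exceed the replication lag you typically observe.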

Scaling out: This combination of caching and replication can handle very large read volumes. The cache serves the hottest data almost entirely from memory. The replicas handle other read traffic in parallel. The primary handles the writes. You can add more replicas if read load grows (horizontal scaling for reads). You can also scale Redis if needed, either by running it as a cluster or by moving it to a larger machine.
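A rough back-of-the-envelope estimate shows how the cache and replicas share the load. The numbers below are illustrative only, and the function assumes reads are spread evenly across nodes and that all queries cost about the same.

```python
def reads_per_db_node(total_reads_per_sec, cache_hit_rate, replicas,
                      primary_serves_reads=True):
    """Estimate the read load each database node sees behind the cache."""
    misses_per_sec = total_reads_per_sec * (1.0 - cache_hit_rate)
    nodes = replicas + (1 if primary_serves_reads else 0)
    if nodes == 0:
        raise ValueError("at least one node must serve reads")
    return misses_per_sec / nodes


# 10,000 reads/s, 90% cache hit rate, 3 replicas plus the primary
estimate = reads_per_db_node(10_000, cache_hit_rate=0.90, replicas=3)
print(round(estimate))  # 250
```

With a 90% hit rate only about 1,000 reads per second reach the databases, and four nodes sharing them see roughly 250 each.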

Use case example: Think of Twitter’s timeline. Each user’s home feed could be cached (so if they refresh, it’s not regenerated from scratch each time). The primary database stores tweets, and read replicas serve timelines or search queries. Redis might store the timeline for a short period so that if the user refreshes within, say, 30 seconds, the request doesn’t hit the database again. Twitter has also used other caches such as Memcached. This general idea pops up everywhere high scale is needed: cache what you can in memory, replicate your data for read throughput, and reserve the main database for the heavy lifting that only it can do (like maintaining consistency on writes).

Important considerations:

- Ensure cache consistency to a level your application needs (through TTL or invalidation on writes).

- Monitor cache hit rates. A low hit rate means either you’re caching the wrong things or the TTL is too low or the cache is too small. You want a high hit rate (e.g., >90%) for the cache to be really effective for hot data.

- For replication, monitor lag. If lag grows (maybe the replica is falling behind due to heavy load), you might need to beef up replica hardware or add another replica to share load.

- Have a strategy for failover: if the primary DB dies, one of the replicas should take over (this can be set up with automatic failover). Also, if Redis cache fails (it’s usually in-memory and not as durable, though Redis can be made persistent or clustered), your system should still function (just a bit slower). Essentially, the system should be resilient: any single component failure should not take the whole system down. The combination of replication and caching helps here – cache is usually non-critical (a luxury that if down, just means queries go to DB), and replication provides backups for the DB.
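Two of these points, tracking the hit rate and surviving a cache outage, can be combined in a thin wrapper around the cache client. This is a sketch under stated assumptions: ResilientCache, BrokenClient, and DictClient are hypothetical classes written for this example, and the broad except clause would be narrowed to redis.exceptions.RedisError when using redis-py.

```python
class ResilientCache:
    """Wraps a cache client so failures degrade to misses (hypothetical helper)."""

    def __init__(self, client):
        self._client = client
        self.hits = 0
        self.misses = 0

    def get(self, key):
        try:
            value = self._client.get(key)
        except Exception:  # with redis-py, catch redis.exceptions.RedisError
            value = None   # treat an unreachable cache as a miss
        if value is None:
            self.misses += 1
        else:
            self.hits += 1
        return value

    def set(self, key, value, ex=None):
        try:
            self._client.set(key, value, ex=ex)
        except Exception:
            pass  # failing to cache must not fail the request

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


class BrokenClient:
    """Simulates a cache server that is down."""

    def get(self, key):
        raise ConnectionError("cache unavailable")

    def set(self, key, value, ex=None):
        raise ConnectionError("cache unavailable")


class DictClient:
    """Simulates a healthy cache backed by a dict."""

    def __init__(self):
        self.data = {}

    def get(self, key):
        return self.data.get(key)

    def set(self, key, value, ex=None):
        self.data[key] = value
```

When the cache is down, every lookup is simply counted as a miss and the caller falls through to the database. On a real Redis server, a comparable hit rate can be derived from the keyspace_hits and keyspace_misses counters reported by the INFO stats command.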

In summary, a high-read architecture will serve most reads from fast, scalable sources: first the cache (fastest), then replicas (fast and scalable), and lastly fall back to primary if needed. Writes go to primary and propagate out. This design can handle dramatically more traffic than a single database server alone, and it’s a common blueprint for web applications, APIs, and microservices in the real world. By mastering these strategies – caching frequently accessed data, replicating databases, and sharding when data grows – you as a developer can ensure your application’s data layer is robust, scalable, and performant.

Conclusion

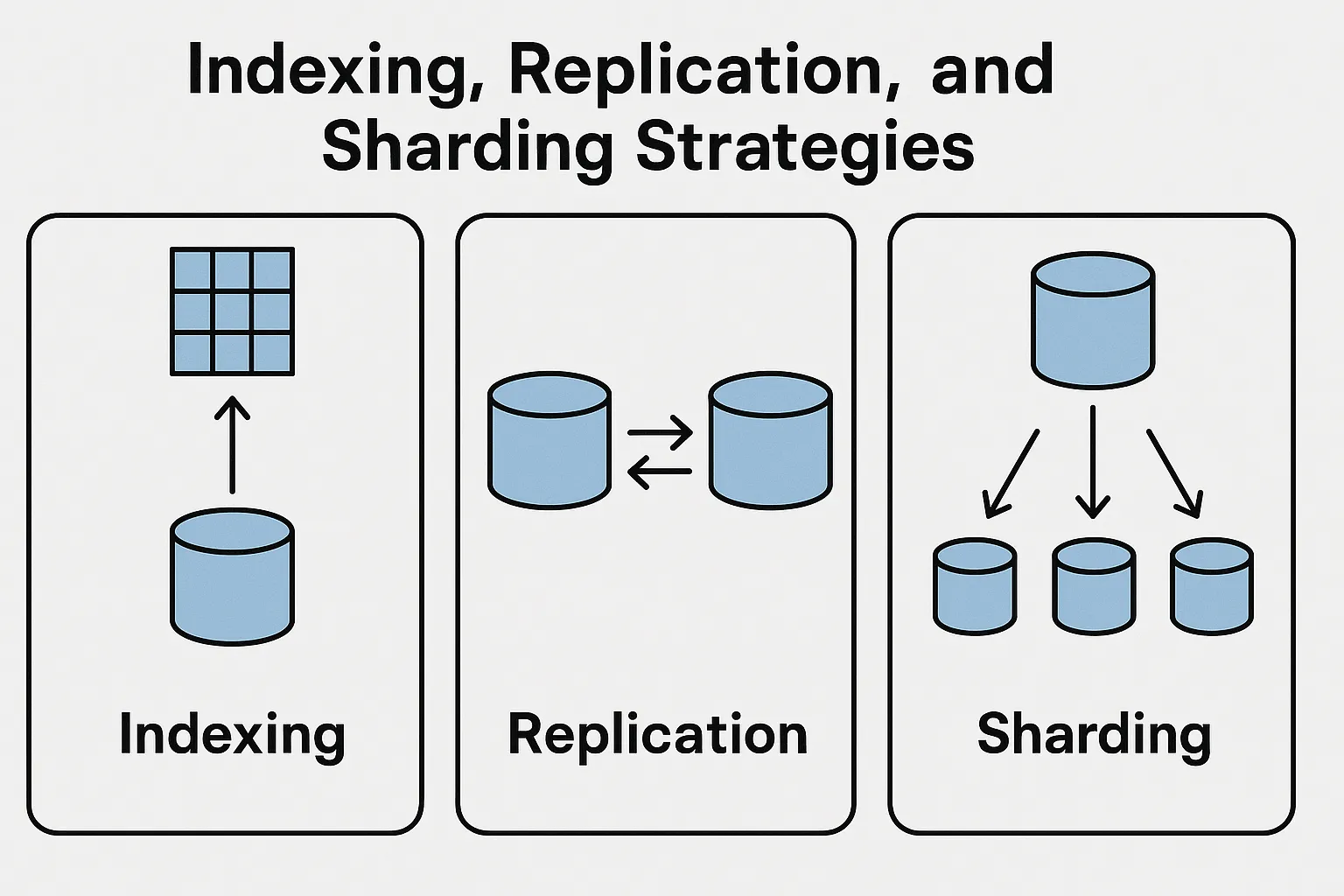

In this blog series, we covered a lot of ground in data storage and database systems. We contrasted relational vs. non-relational databases, learning how SQL databases enforce schemas and relationships while NoSQL offers flexibility and horizontal scale. We saw how data modeling differs between the two paradigms, with normalization in SQL and use-case-driven schema in NoSQL (embedding vs. referencing). We clarified the CAP theorem, explaining why distributed systems can’t have it all and how that leads to different consistency models like strong and eventual consistency. We also delved into practical scaling techniques – using indexes to speed up queries, replication to distribute load and ensure high availability, and sharding to partition data across servers for massive scale. Finally, we put it all together in a scenario designing a high-read system with Redis caching and replicated databases, demonstrating how these concepts play out in a real-world architecture.

The key takeaway is that database design is not one-size-fits-all. Each decision – SQL vs NoSQL, how to model data, how to ensure consistency, where to add indexes, whether to replicate or shard – depends on the application’s requirements and usage patterns. As you gain experience, these become tools in your toolbox. Need flexibility and quick iteration? Maybe start with a schemaless store. Need absolute consistency for transactions? A relational database with strong ACID guarantees is the way. Scaling reads? Add caching and replicas. Huge scale writes? Shard the data.

Remember, a well-chosen and well-tuned database strategy can make the difference between a snappy app and one that crumbles under load. Happy coding, and may your queries be ever fast and your systems ever scalable!
