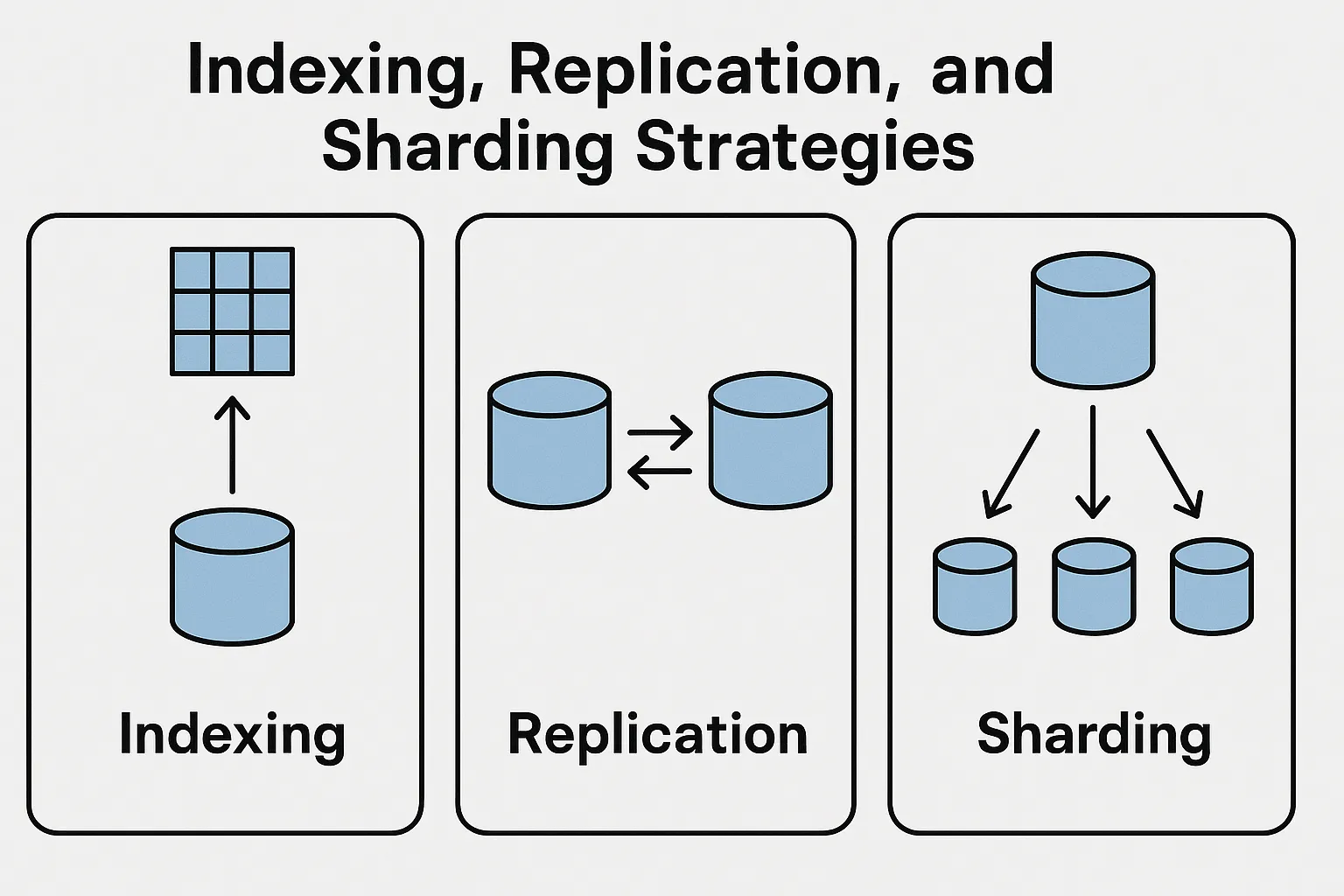

In previous blog we’ve covered data models and consistency, let’s discuss how to make databases performant and scalable. Three key techniques are indexing (for query performance), replication (for reliability and read scaling), and sharding (for horizontal scaling of write workload and data volume). These are not mutually exclusive – they are often used together in large systems.

Indexing

An index is a data structure that helps speed up data retrieval on specific columns (or fields). In relational databases, an index is often a B-tree (or variants like B+tree) that allows binary search-like efficiency instead of scanning every row. In PostgreSQL, for example, if you query SELECT * FROM orders WHERE customer_id = 123;, having an index on orders(customer_id) means the database can jump directly to the entries for customer 123 instead of reading the entire orders table. This can make a huge difference: without an index, the database does a sequential scan through potentially millions of rows with an index, it performs a much faster lookup, resulting in millisecond-range query times even on large tables.

Creating an index in SQL is straightforward. For example, to index the customer_id in the orders table:

CREATE INDEX idx_orders_customer ON orders(customer_id);After this, queries filtering by customer_id will use the index to find matching rows quickly. Behind the scenes, PostgreSQL maintains the index as data is inserted/updated (which does add some overhead to writes, since the index needs updating too).

NoSQL databases like MongoDB also support indexes. In Mongo, you’d do:

db.orders.createIndex({ customer_id: 1 });This creates an index on the customer_id field in the orders collection (the 1 indicates ascending order index). MongoDB’s query engine will use this index for queries like find({customer_id: 123}). In fact, indexing in Mongo is very similar conceptually to SQL – it even uses B-trees by default.

When to index: You generally add indexes on fields that are frequently used in query filters, join conditions, or sorting. A primary key is usually indexed by default (ensuring fast lookup by ID). Be careful not to over-index: each index consumes disk space and slows down writes. It’s a trade-off: more indexes = faster reads (queries), but slower inserts/updates and more storage usage. Use tools like EXPLAIN in PostgreSQL to see if a query is using an index or not, and profile your database workload to decide which indexes truly help.

Example: If we often query orders by product name, an index on orders(product) makes sense. But if we never query by amount, an index on amount would be unnecessary overhead. In practice, start with indexes on key search fields and primary keys, then monitor and add indexes as needed for slow queries.

Replication

Database replication means keeping copies of your data on multiple nodes (servers). The most common form is master-slave (primary-secondary) replication: one primary node receives all writes, and one or more secondary nodes replicate those changes (usually asynchronously, but can be synchronous) and are used for reads or standby. The motives for replication are: high availability (if the primary goes down, a secondary can take over) and read scaling (many reads can be spread across replicas, reducing load on the primary).

In PostgreSQL, replication can be set up so that you have one primary and multiple read replicas. The primary logs all changes (WAL – write-ahead log), and replicas continuously apply those logs. If the primary fails, you can promote a replica to become the new primary. Many cloud setups (Amazon RDS, etc.) automate this failover. From a developer perspective, you might connect your app to the primary for writes and to a replica for read-heavy endpoints. Keep in mind replication lag – there’s typically a small delay, so a replica might be a few seconds behind the primary. This is usually fine for eventually-consistent reads (e.g., an analytics query or reading someone else’s updates slightly behind real-time), but not for, say, reading your own recent write (which is why many frameworks allow read-after-write on primary to ensure consistency for that user’s action).

MongoDB’s approach is built-in via replica sets. A Mongo replica set consists of one primary and N secondaries. The driver (client library) will automatically send writes to primary and distribute reads (if you configure it to use secondary reads). MongoDB will handle automatic failover – if primary dies, secondaries elect a new primary among themselves. Mongo’s replication is usually asynchronous (primary writes, secondaries catch up), so it’s also eventually consistent for secondary reads.

Benefits of Replication:

-

Fault tolerance: If one node goes down, the data is still available on others (no single point of failure).

-

Scaling reads: You can have, say, 5 replicas and handle 5x the read queries (approximately) by load-balancing reads. This is great for read-heavy workloads.

-

Geographical distribution: You can place replicas in different regions (e.g., one in US, one in Europe) so that local users get faster read access from a nearby replica. Writes still go to primary (which might be in one region), but reads can be served locally.

Trade-offs:

-

Replication doesn’t help write throughput, since every write still has to go through the single primary (vertical scaling or sharding is needed for write-scaling).

-

There is complexity in ensuring all replicas are consistent and dealing with lag. If a replica is slow or network is partitioned, it might fall behind. If it’s too far behind, it might be automatically taken out of rotation until caught up (to avoid serving very stale data).

-

Writing to multiple replicas (multi-master replication) is another approach (like MySQL NDB Cluster or some multi-primary NoSQL systems), but those introduce conflict resolution issues. Most setups use one primary at a time to avoid write conflicts.

From a developer viewpoint, once replication is set up, you mostly just need to be aware of any lag if your reads go to replicas. Some ORMs or query routers allow specifying read-preference (primary vs replica). For instance, in a Node.js app with Mongo, you can do readPreference=secondaryPreferred for certain queries that can tolerate eventual consistency.

Sharding (Partitioning)

Sharding is the technique of partitioning your data across multiple servers so that each server (shard) holds only a subset of the total data. If replication is about copies for redundancy, sharding is about slices for scalability. Each shard is often a replica set in itself in modern systems (so you shard for scale and also replicate each shard for resilience – these can be combined).

There are different strategies for sharding:

-

Key-based (Hash) Sharding: You choose a shard key (like user_id, or some hash of an identifier) and use a hash function to distribute records across shards. For example, with 4 shards, user_id 1000-1999 might go to shard1, 2000-2999 to shard2, etc., or a hash mod 4 of the key decides the shard. This aims to evenly distribute data. MongoDB lets you specify a shard key on a collection, and it will split data into chunks and distribute across shards.

-

Range Sharding: Each shard holds a continuous range of the data. For instance, shard1 has IDs 1-1,000,000, shard2 has 1,000,001-2,000,000, etc. Range sharding can be efficient for range queries (e.g., dates), but if the data or access is skewed (say most new data goes to the highest range), one shard can become a hotspot.

-

Geographic or Attribute-based Partitioning: Sometimes you shard by a particular attribute, like region. For example, user records might be partitioned such that European users are on a Europe shard, American users on US shard. This can reduce latency for users and comply with data residency, but it’s essentially a manual partition by a field.

Sharding addresses the limitation of a single machine’s resources. If your dataset is 10 TB, far too large for one server’s disk or memory, you can shard it into 10 machines with 1 TB each. Similarly, if you need to handle 100k writes per second, you can divide the load among multiple shards (10k/sec each, for instance).

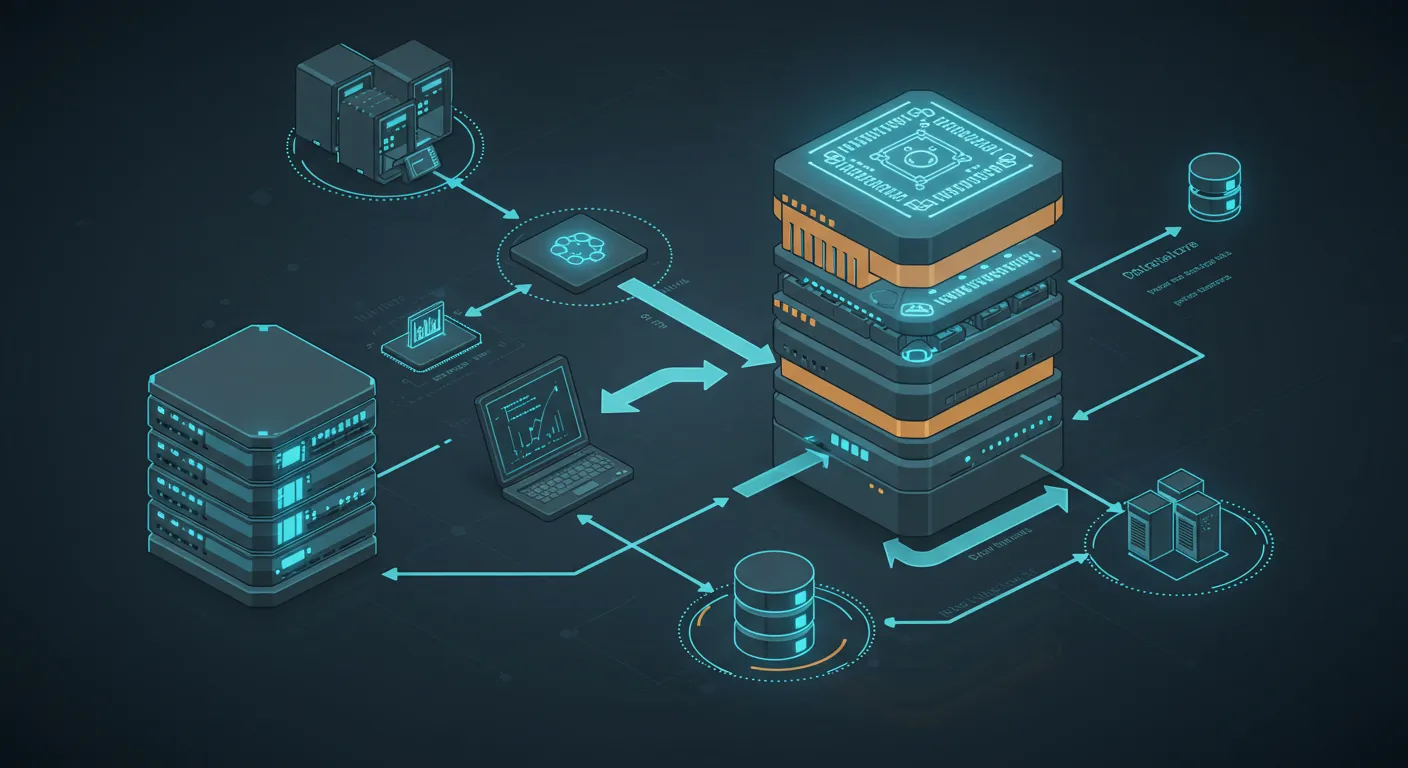

MongoDB Sharding: In MongoDB’s architecture, there is a component called mongos (router) that knows how to route queries to the correct shard. The data is partitioned by shard key, and a config server keeps metadata of which chunk of data lives where.

Each shard is typically a replica set (to have both sharding and replication).

So a production Mongo cluster might have: config servers, query routers (mongos instances), and multiple shards (each shard = primary + secondaries). When the application queries Mongo, it goes through mongos which forwards the query to the appropriate shard(s). If you query by the shard key, it can go to the specific shard that has that key. If you do a broad query not keyed by the shard key, the router may broadcast it to all shards and aggregate results (which is slower). So designing a good shard key that evenly distributes data and matches your common query patterns is crucial.

Sharding in SQL: Traditional SQL databases were not trivially sharded – they assumed one big machine. However, modern approaches and extensions exist: for example, PostgreSQL can be sharded using table partitioning (which is more manual) or solutions like Citus (an extension that distributes PostgreSQL across nodes). In these, you often pick a distribution key for a table, and the system splits data into shards stored on different nodes. There’s no built-in query router in vanilla Postgres, but Citus provides one, or you handle it at the application level. There are also frameworks and proxies (like Vitess for MySQL) that handle sharding behind the scenes for SQL. The takeaway is: it’s doable, but not as out-of-the-box as with many NoSQL systems that assumed sharding from day one.

Challenges with Sharding:

-

Complexity: You now operate a cluster of databases instead of one. Monitoring, backups, and operations are more involved.

-

Rebalancing: If one shard becomes too full or hot, you might need to reshard or add more shards. Moving data between shards (rebalancing) can be tricky and time-consuming. MongoDB auto-balances chunks across shards, but in some systems you have to do manual data migrations.

-

Joins and Transactions: In a sharded environment, cross-shard joins or multi-shard transactions are hard. If your query needs data from two shards, the system (or your application) must gather from both and combine. Some databases support distributed transactions across shards, but it can be slow or limited. Often, schemas in sharded systems are designed to avoid the need for cross-shard operations (e.g., choosing a shard key that groups related data likely to be accessed together on the same shard).

-

Partition tolerance vs. consistency: With shards, if a shard (or its network) is unreachable, part of your data is unavailable. The system might choose to still operate with remaining shards (meaning some data can’t be fetched until that shard is back). If high availability is needed, each shard should be replicated (so that a single node failure in that shard doesn’t make the shard unavailable).

To sum up, replication is about copying data for reliability and read throughput, while sharding is about splitting data for capacity and write/read scaling. They solve different problems and are often used together: you might shard a huge dataset across 10 servers, and also replicate each shard to another server for backup. That gives you horizontal scale and high availability (this is essentially how large cloud databases operate).

References:

-

Indexing benefits for query performance

-

Replication and sharding explained

-

Combined replication and sharding in practice